Visual Thinking Lab

We study visual thinking: how it works, and how education + design can make it work better.

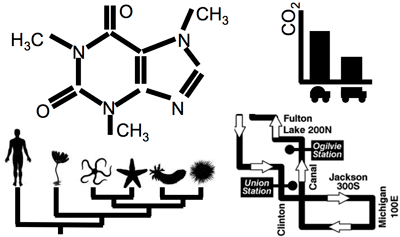

Both STEM education and broader scientific reasoning rely on spatially depicted relations. These depictions include bar graphs, line graphs, histograms, cladograms, timelines, chemical models, flowcharts, maps, and mechanical drawings (see Figure 1 for examples). Spatial depictions can be an extremely efficient way to present information (e.g., Tversky, 2005), but many students struggle to understand them (e.g., Kozhevnikov, Motes, & Hegarty, 2007; Shah & Carpenter, 1995).

To find out why some students struggle, and to find inspiration for interventions that could improve their performance, we must understand how the human visual system processes spatial relations among objects. Past work reveals many aspects of this process, including the types of reference frames that can underlie these relations and how people handle real or imagined transformations of those relations (e.g., a change in viewpoint; Shelton & McNamara, 2001). Beyond such themes, we know strikingly little about the mechanism our visual system uses to process spatial relations. For each of the depictions in Figure 1, we have little idea how the visual system represents arbitrary spatial relations among their several objects or parts. Our understanding is in fact even more impoverished: we have little idea how we flexibly represent the relation between just two objects. Before we can approach the larger puzzle of how structure is represented among several objects, we must first solve this more basic problem, so that we know the basic units that make up that structure.

We have started to address this problem by assembling a taxonomy of mechanisms that the visual system might use to process spatial relations. We divide these possibilities into two major classes that differ in how the objects in a relation are attended. One class requires attending to the objects simultaneously; the other requires that attention shift to at least one object over time. We argue for the existence of this novel second class, in which we must isolate one object at a time. This step is needed to solve a set of well-known problems in vision that involve matching object identities to their locations.

As a practical example, when you see the salt and pepper shakers on your dining table, you feel that you can pay attention to both objects simultaneously and still know which one is on the left or right. We predict that this percept is an illusion: to know that the salt is on the left, you need to shift your attention exclusively to the salt.

Evidence for attentional shifts during spatial relationship judgments

To demonstrate that people shift their attention even during the simplest spatial relationship judgments, we track movements of attention using eyetracking (Roth & Franconeri, 2012), an electrophysiological "attention tracker", and other behavioral techniques (Roth & Franconeri, 2012). Across these studies, we show that during simple judgments of the spatial relationship between just two objects (e.g., left-right decisions), people reliably shift attention toward one object. The strategy varies across tasks; one example is a tendency to shift to the left, as we do when starting a new line of text in a book.

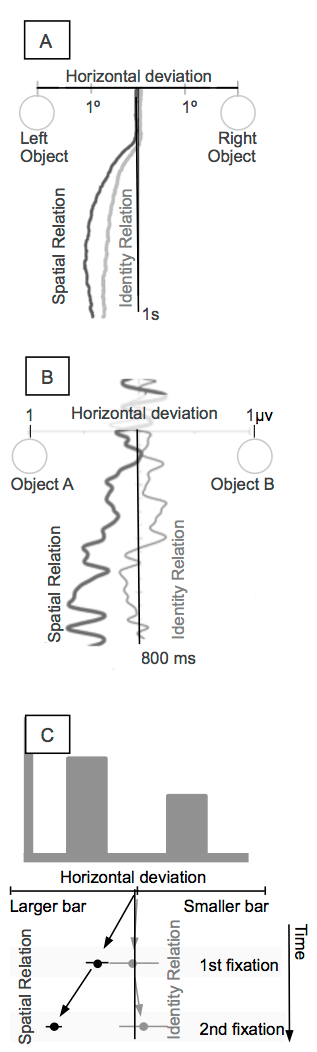

Figure 2a shows an example using eyetracking. During a spatial relationship judgment between two objects (participants were asked to encode the left-right relationship of a red and a green circle), participants reliably looked at the left object, despite the ease of the task. Figure 2b shows a parallel pattern using our electrophysiological "attention tracker". Even when participants kept their eyes perfectly still (confirmed by an eyetracker), they still systematically shifted attention to one of the objects during a simple spatial relationship judgment. (In this case the shift was not toward the left object; for methodological reasons, we needed to look for a different type of systematic shift.)

In each case, participants shifted their eyes or attention during spatial relationship judgments. But perhaps they would do this for any judgment. As a control, we tested a second judgment that also required inspecting both objects but removed the spatial component of the relation. In this 'identity relation' control, participants indicated whether the two objects' colors were the same or different. Figure 2 shows that eliminating the spatial component significantly reduced the shift. In the eyetracking experiment (Figure 2a), there was still some evidence of a shift, but in that experiment we did not actively discourage participants from incidentally encoding the spatial relation, even though it did not help their performance. For the "attention tracker" experiment (Figure 2b), we added incentives for observers to ignore the spatial relation in the identity relation condition, and the shifts disappeared completely.

Relational processing in graphs

We have begun to extend our hypotheses and methods to the perception of bar graphs, and we have found that extracting relations from bar graphs also requires shifts among individual bars (Parrott, Uttal, & Franconeri, in preparation; Parrott, Uttal, Shah, Maita, & Franconeri, in preparation). Figure 2c shows an eyetracking study in which participants judged either the spatial relation within a 2-bar graph ("Does the graph depict [oO] or [Oo]?") or an identity relation control ("Are the bars of equal size or not?"). The results mirrored those of the previous experiments: spatial relation judgments drew eye movements toward one bar (this time, toward the larger bar), but again this effect disappeared for identity relation judgments.

Conclusions

We predict that shifting attention is a critical part of constructing visual representations of spatial relations, and that the way these shifts unfold over time will critically influence how people understand the relations within both simple and complex graphs. Our new work along these lines extends to children and to computer-based interventions that support the development of relational processing in graphs, seeking new ways to teach students the ‘perceptual’ skills needed to interpret graphically presented information.

References

Roth, J., & Franconeri, S. L. (2012). Representations of spatial relationships may be asymmetric for both language and vision. Frontiers in Cognition, 3, 464.

Kozhevnikov, M., Motes, M., & Hegarty, M. (2007). Spatial visualization in physics problem solving. Cognitive Science, 31, 549-579.

Shah, P., & Carpenter, P. A. (1995). Conceptual limitations in comprehending line graphs. Journal of Experimental Psychology: General, 124, 43-61.

Shelton, A. L., & McNamara, T. P. (2001). Systems of spatial reference in human memory. Cognitive Psychology, 43(4), 274-310.

Tversky, B. (2005). Visuospatial reasoning. In K. Holyoak & R. Morrison (Eds.), The Cambridge Handbook of Thinking and Reasoning (pp. 209-249). Cambridge: Cambridge University Press.

Figure 1: The molecular diagram represents spatial relations among atoms. Cladograms depict phylogeny as spatial relations. The bar graph conveys the message that taking a bus to school requires less CO2 per student than taking a car, and understanding this message requires determining the relation between the bars. A subway map represents real-world spatial structure in abstracted form.

Figure 2: Each graph depicts the lateral position of attention on the x-axis and time on the y-axis, with the task starting at time 0. Participants were asked to encode either the left/right spatial relation between two objects (or the trend in a graph) or the identity (same/different) relation between those same objects. Panel (a) shows lateral eye movements for each task, (b) shows lateral attentional movements with fixed eye position, and (c) shows eye movements during a 2-bar graph judgment.